Vivliostyle advertises itself as a “publishing workflow tool.” It is a great tool, but this isn’t a terribly accurate or descriptive tagline, since publishers can’t really use it without changing everything else they do. Hence my preference for calling it an HTML pagination engine (although I used to refer to this process as Browser Typesetting). It can be part of a publisher’s workflow, but only after they have moved everything else they do to an HTML-first workflow.

On this point, I think Vivliostyle have their sales pitch wrong, but it is hard to know how they might do it better, since Vivliostyle imagines, as do many other tools, a way of doing things that is radically different from how publishers operate now. Vivliostyle is one necessary part of an approach that is, in effect, a paradigm shift in how publishers work. This ‘new way’ of working is exciting and transformative, but it is hard to capture a paradigmatic change in a few catchphrases. So, to understand the value of Vivliostyle, you first have to understand how publishers work now, why it is a terrible way to work, and what HTML-first workflows can offer. Once you understand that, you can see how exciting Vivliostyle could be for publishing.

So… what does it do? Vivliostyle is the latest in a long list of tools that, among other things (more on these in later posts), can transform HTML into PDF. These tools have typically been used to produce book-formatted PDF for printing. There are a few such tools out there, but most are proprietary and only a handful have been open source (notably BookJS, which I was involved in a long time ago, and CaSSius). Vivliostyle is the newest member of this family tree, the root category of which I would describe as an HTML pagination engine.

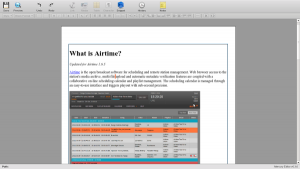

What it does is this – it takes an HTML file and paginates it. It flows the HTML through the ‘boxes’ (pages) you have defined and lays the content out nicely in each box, one box after the other, flowing all the content through it with the right margins (and more, see below). So instead of scrolling through the web page, you page through the document on screen. Vivliostyle converts the HTML into ‘pages’. It is an HTML pagination engine.
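To make the idea of ‘flowing content through boxes’ a little more concrete, here is a deliberately naive sketch in Python. It is not how Vivliostyle works internally: a real engine measures rendered boxes in the browser and deals with line breaking, images, floats and much more. This toy only assumes that every block of content has a known height and fills fixed-height pages one after the other. The names `paginate`, `page_height` and the block heights are all made up for illustration.

```python
from typing import List, Tuple

# A block of content: (label, height in arbitrary units). Hypothetical data.
Block = Tuple[str, int]


def paginate(blocks: List[Block], page_height: int) -> List[List[str]]:
    """Greedily flow blocks into pages of a fixed height.

    A block that does not fit in the space left on the current page
    starts a new page. Blocks are never split across pages here.
    """
    pages: List[List[str]] = []
    current: List[str] = []
    used = 0
    for label, height in blocks:
        if height > page_height:
            raise ValueError(f"block {label!r} is taller than a page")
        # Close the current page if this block would overflow it.
        if current and used + height > page_height:
            pages.append(current)
            current, used = [], 0
        current.append(label)
        used += height
    if current:
        pages.append(current)
    return pages


blocks = [("h1", 3), ("p1", 5), ("p2", 4), ("img", 6), ("p3", 2)]
pages = paginate(blocks, page_height=10)
for number, page in enumerate(pages, start=1):
    print(number, page)
```

With the sample data, the five blocks end up on three pages. The point is only the shape of the process: content in, a sequence of filled boxes out.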

It is then possible to do a lot more with these pages: adding page numbers, using CSS (the style rules used by browsers) to define the look and feel of text, images and so on, and adding headers, notes and footers. In other words, you can add to each page everything you need to make the result ‘look like a book’.
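As a rough illustration of what such page styling can look like, the sketch below uses Python to assemble a small stylesheet built from CSS Paged Media features: an `@page` rule for size and margins, a page-number counter in the bottom margin, and running headers set with `string-set`. The helper name `build_page_css`, the `h1.book-title` selector and the trim size are my own assumptions, and which of these rules a given engine supports should be checked against its documentation.

```python
def build_page_css(width_mm: int, height_mm: int, margin_mm: int) -> str:
    """Return a small CSS Paged Media stylesheet as a string.

    The selectors and string names are illustrative assumptions,
    not taken from any particular book or tool.
    """
    return "\n".join([
        "@page {",
        f"  size: {width_mm}mm {height_mm}mm;",
        f"  margin: {margin_mm}mm;",
        "  /* page number in the bottom margin of every page */",
        "  @bottom-center { content: counter(page); }",
        "}",
        "@page :left {",
        "  /* running header on left-hand pages */",
        "  @top-left { content: string(book-title); }",
        "}",
        "@page :right {",
        "  /* running header on right-hand pages */",
        "  @top-right { content: string(chapter-title); }",
        "}",
        "/* capture heading text so the margin boxes can reuse it */",
        "h1.book-title { string-set: book-title content(text); }",
        "h2 { string-set: chapter-title content(text); }",
    ])


css = build_page_css(148, 210, 20)  # roughly an A5 page
print(css)
```

The resulting text would be saved as a `.css` file and linked from the HTML like any other stylesheet.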

You can do this transformation in the browser because Vivliostyle is written in JavaScript. If you want to see this in action, check out their demos page. For example, look at this page showing the raw HTML of a book by Lea Verou (published by O’Reilly), then open this page (give it a minute to render). Now right-click and print (best in Chrome or Chromium browsers; you may need to turn margins off and background images on when printing). The result should give you a one-to-one correlation between the paginated content in the browser (which is HTML) and the paginated print-ready PDF.

Since the browser can also print to PDF, you can take this newly styled ‘book looking’ HTML and print it to PDF from the browser. From this, you have a book-formatted PDF that is ready to send to the printer to be printed and bound. I’ve worked this way for many years and printed many books this way for organisations from the World Bank to Cisco. The system works and the printed books look great. Vivliostyle is, at this point, the most sophisticated open source tool for doing this.

It is pretty amazing stuff. From HTML to PDF to printed book at the press of a button. Magic. This process is also catching on. Hachette produce their trade paperbacks using an HTML-to-PDF rendering engine with styling via CSS. So it is no longer a process reserved for small experimental players.

So, why is this interesting? Well, it is interesting almost without an explanation! Transforming HTML in this manner seems pretty magical and it’s kinda neat just to look at the demos and marvel at it. However, the really interesting part comes into play when we start talking about workflows. This is where Vivliostyle can be part of transforming publishing workflows.

As a publisher, if you were to take Vivliostyle ‘out of the box’ it would not be of much use to you. How many web pages do you have that you want to turn into PDF? Not many, if any. How much of your book or journal content in your current workflow is stored in HTML? Probably none. In all likelihood, HTML in your business is restricted to your website and, perhaps, EPUBs, if you produce them (EPUB is just a zip archive containing HTML files and some other stuff). But in most publishers’ workflows the EPUB is an end-of-line format. Publishers take the completed copy in MS Word, or (sometimes, regrettably) PDF, and send it to a vendor (typically in India) to transform into EPUB and send back. So chances are, HTML is not the format being used as the basis for your book or journal workflows (apart from possibly being an end-of-line format).
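The ‘zip archive containing HTML files’ point is easy to check for yourself. The sketch below uses Python’s standard `zipfile` module to build a toy EPUB-like archive in memory and then list the HTML files inside it. The toy is not a complete, valid EPUB (it has no package document, for one thing), and the file names and helper functions are assumptions made for illustration. With a real book you would pass the path of the `.epub` file to `zipfile.ZipFile` instead.

```python
import io
import zipfile
from typing import List


def build_toy_epub() -> bytes:
    """Build a tiny EPUB-like zip in memory (illustrative, not a valid EPUB)."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        # EPUB expects 'mimetype' to be the first entry, stored uncompressed.
        zf.writestr("mimetype", "application/epub+zip",
                    compress_type=zipfile.ZIP_STORED)
        # Placeholder only; a real container.xml points at the package document.
        zf.writestr("META-INF/container.xml", "<container/>",
                    compress_type=zipfile.ZIP_DEFLATED)
        # The content itself is ordinary (X)HTML.
        zf.writestr("OEBPS/chapter1.xhtml",
                    "<html><body><h1>Chapter 1</h1></body></html>",
                    compress_type=zipfile.ZIP_DEFLATED)
    return buf.getvalue()


def list_html_files(epub_bytes: bytes) -> List[str]:
    """Return the names of the HTML/XHTML files inside an EPUB archive."""
    with zipfile.ZipFile(io.BytesIO(epub_bytes)) as zf:
        return [name for name in zf.namelist()
                if name.endswith((".xhtml", ".html"))]


epub_bytes = build_toy_epub()
html_files = list_html_files(epub_bytes)
print(html_files)
```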

As a publisher, you don’t have manuscript copy in HTML, so Vivliostyle is not going to fit snugly into your workflow. In order to utilise it, you need to transform the way that you work. You have to start working in HTML or, with more difficulty, work in some format that can be transformed into very tightly controlled HTML output so that Vivliostyle can work with it.

I don’t like the latter style of workflow. This is where you work in something like XML (of some sort) and then transform to HTML at the end of the process. It’s an ugly workflow, not friendly for non-techie users and typically full of redundancy. If you want good HTML, then just work with, and in, HTML. This comes with additional benefits, since from good HTML you can get to any format you want PLUS you have the advantage of now being able to move your workflow into the browser. And this is where Vivliostyle fits into a toolset, an approach, that could transform how you work: the HTML-first production environment.

The current ‘state of the nation’ in publishing is pretty terrible. Most publishers use MS Word docx as their document format, and Track Changes and email are their primary workflow tools. This means that there is a single document of record: the collection of Word files. These are shareable, in the sense that you can email people copies, but you cannot have multiple people working on them at the same time. In effect, the MS Word files are like digital paper in the worst possible way. There is only one ‘up to date’ version and only one person can work on that version while they hold onto the files. There is no easy way to follow a document’s history, revert to specific versions, or identify who made what change when. Further, there is no inherent backup strategy built into standalone MS Word files. Everything must be done manually. That means organizing the files in directory structures with naming conventions that are known only to those in the know (since there is no standard way of doing this). There are also problems with email as a collaboration tool. Did it send? Did they get it? Did they get the right version? Plus there is no way of understanding the status of the documents unless you ask via email or there is some other system that is manually updated for status tracking. The system is not transparent. Further, changing workflows when using systems like this, even for small optimisations, is quite difficult, and the larger the team the more difficult it gets.

Additionally, using Word and email like this is really placing unnecessary gateway mechanisms on the content. If I have the up-to-date versions then you can’t have them or work on them. There is really only one copy and only one person can work on it. That makes for linear workflows and strongly delineated roles. No one can ‘jump in and help’ and it is very difficult to alter the linearity of the process or redistribute the labor to achieve efficiencies.

If publishing is to move on, then workflows need to migrate to the browser. With browser-based workflows, there is no need to have multiple copies of the same file, versioning is taken care of as is document history, it is easy to add and remove people from the process, and labor can be better distributed over both roles and time to create more elegant, efficient workflows. I wrote about this in an earlier post and will write more in posts to come since there is a lot more to it. But suffice to say that moving publishing workflows to the browser is a little like ‘sucking all the gaps’ out of the current Word-email workflows (plus a whole lot of other benefits). No more checking your Inbox while you wait for status updates or for someone to send you the files so you, and you alone, can work on the next little part of the process while everyone else waits. Additionally, there can be full transparency as to what needs to be, has been, and is being done (and by whom). There is the opportunity to break down larger tasks into smaller tasks and have them all in play concurrently. There is the opportunity to share the same tools and hence enhance communication and redistribute the work to wherever (and whoever) it makes most sense. There is so much to be gained.

This is not to say that browser-based workflows are ‘anything goes’ workflows (which is what most publishers think this way of working amounts to). You can still assert rules of who has access and when. But… in my experience, when you migrate workflows to the browser, publishers start rethinking how they work and you often hear comments like “but we don’t need to do it like that anymore”… They then start designing radically better workflows themselves.

So, the point of all this is that Vivliostyle by itself does not achieve this. It is not, in itself, a workflow tool for publishers. You first need all the other things that enable an HTML-first workflow to be in place and once they are there, then you can utilize Vivliostyle to transform the HTML (at the push of a button) to the PDF you need for printing. That is the radical improvement Vivliostyle can offer. Cut out the file conversion vendors and render the content according to templated style sheets (automated typesetting can produce beautiful results). This means you can check what the book will look like at any moment, plus the CSS stylesheets you use can also be included in your EPUBs (also rendered at the push of a button since the original content is already in HTML, the content filetype for EPUB) so your printed book and the EPUB look the same.

So, Vivliostyle is a necessary tool for HTML workflows and with an HTML workflow you will radically improve what you do.

This is why Vivliostyle is important to publishers, but you cannot consider it in isolation. You must consider it with regard to migrating to an HTML-first workflow. If you migrate to this kind of workflow then not only will you experience the efficiencies described above but your organisational culture will be transformed and the types of content you can then produce will become a lot more open ended. This is the vision that Vivliostyle, and other tools that enable HTML-first workflows (including those developed by UCP and Coko), are imagining and building towards.

Dear reader, out of principle, I do not use proprietary social media platforms and networks. So, if you like this content, please use your channels to promote it – email it to a friend (for example). Many thanks! Adam