I get asked a lot how many platforms are there built on top of PubSweet. It can be quite difficult to understand this by looking at the Coko website. So here goes (we might add to the below and put it on the Coko site).

Hindawi

Hindawi is currently considering names for the journal platform it currently has in production, plus they are building more than one platform. First up is their journal platform, currently in production for their first journal. It is a low volume journal, with 20 submissions so far and 1 article which has gone through the entire process and been accepted for publication.

Hindawi are looking to make the platform multi-tenanted – which is a often misused/misunderstood phrase, but essentially it points to a strategy for adapting the software so that one organisation can run multiple journals from the same (singular) install/instance. Hindawi then will be going live with the second journal in the near future. They are leading the charge in the Coko community on this multi-tenanted approach and we continue to learn a lot from this amazing team of folks.

On top of this Hindawi are building a QA platform on top of PubSweet. All of which is open source and available to whoever wants it. They went live within a year of beginning to build. Notably the first to go into production with a PubSweet Journal platform.

After gaining this experience (building their first PubSweet Journal platform) they scaled their team up to 18 people working on different PubSweet developments.

eLife

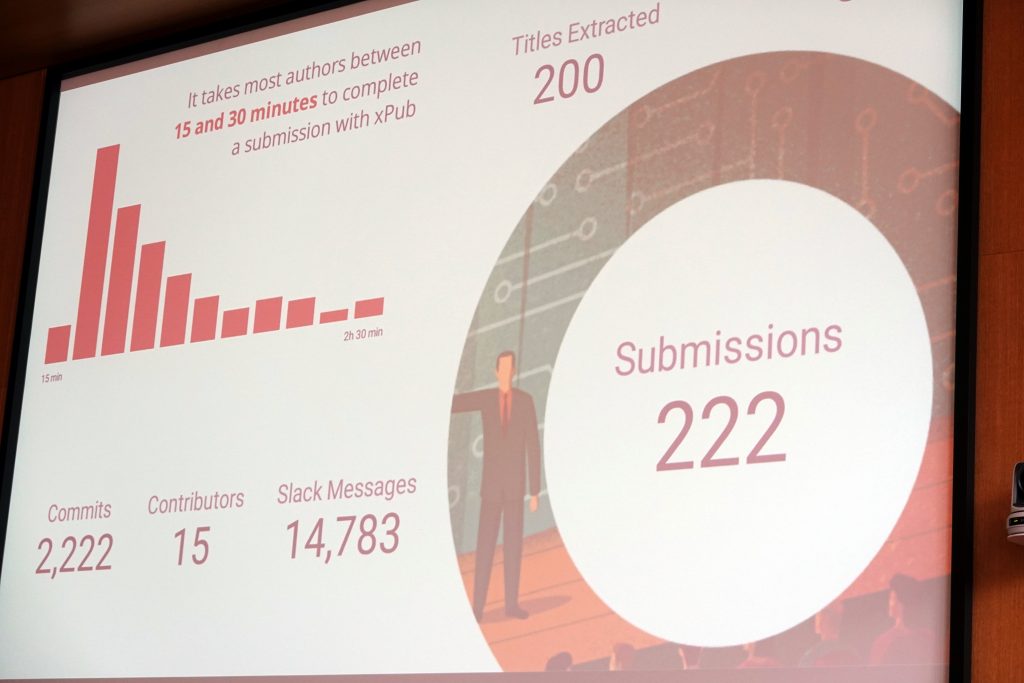

eLife have a special issue where they wish to replace their current platform – EJP – but in phases. So they have been working closely with the EJP team to replace the workflow step by step. The first step has been to replace the submission workflow. Now 60% of all submissions go through their PubSweet submission component and then the article is transitioned into EJP via an EJP API (recently built). The content is transferred between the two systems using a MECA bundle, representing the first time MECA has been used in the world in a live use case. The MECA component is, of course, is open source and available to anyone building a PubSweet platform. They went live with the submission app in just over a year of beginning to build.

eLife is building out their entire workflow as a series of inter-connected PubSweet apps. This means you can grab one part of their system and integrate it in your workflow. Its an interesting strategy.

The cool thing about the Coko community is that we have a variety of approaches to similar problems so it will be interesting to see how attractive the multiple app proposition is to others. They will name each standalone app with the prefix Libero (eg Libero Publisher, Libero Review etc). All is open source.

It has to be noted that EJP’s position is a very enlightened one and fantastic to see a forward-looking vendor working with a Coko community member. I am sure this is going to work out in their favor going forward. I think the number of folks working on these platform components for eLife is maybe 6-10 … will ask Paul and check.

EBI

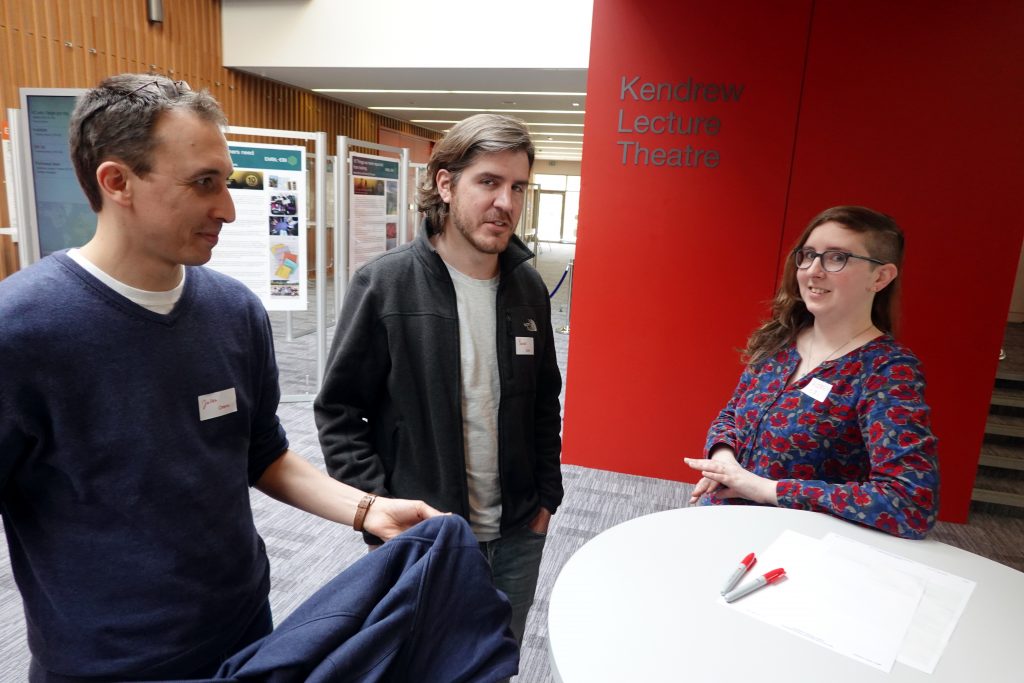

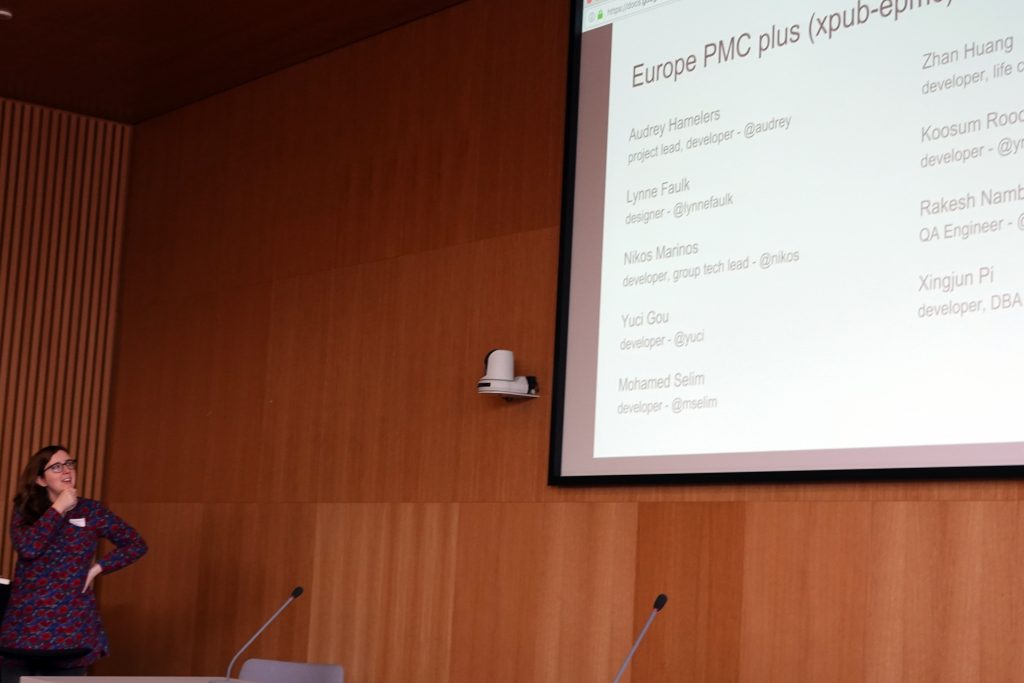

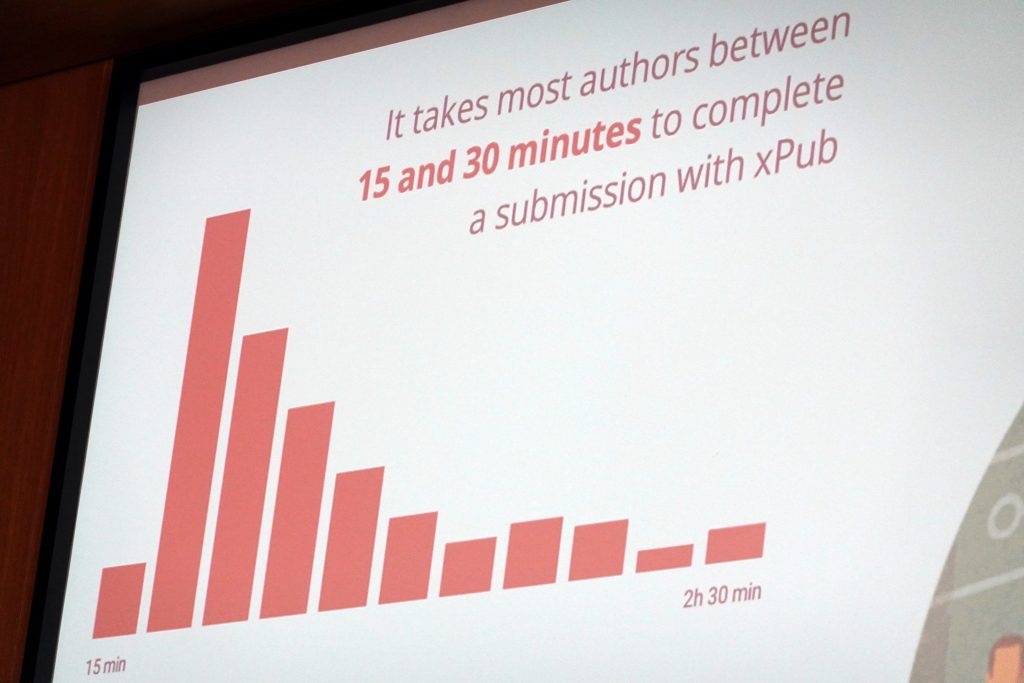

Lead by the very awesome Audrey Hamelers, the EBI (European Bioinformatics Institute) platform is going live in May with their system. They are intending to flick the switch and go from 0 to 100. The platform looks awesome and it is essentially a multiple source post-publish QA system for content aggregation (pushing to EPMC). It supports manual input through a submission wizard as well as batch FTP upload/submission. Although built specifically for their workflow it is conceivable the platform could be used for any workflow requiring post acceptance/production QA.

They have done some cool stuff with annotations using Nick Stennings Annotator, to QA XML. They are also using XSweet for docx to HTML conversion and a whole lot of other interesting tech. EBI have built this extremely quickly, with most of it done in a couple of months. Other priorities got in the way and they had to pause for a few months, but even so, if they go live in May it will be done in under a year from starting. I think they have a small dev team of about 3-5.

EBI has most of the Coko community meets. Awesome folks. All open source.

Editoria

Editoria is developed by the Coko team. A fully fledged post-acquisition book production workflow. It was developed with the University of California Press and the Californian Digital Library. Editoria is now used in production by UCP, ATLA, Punctum Books and Book Sprints. There is a fledgling but healthy community evolving around the product as well as a forthcoming hosting vendor agreement (tba). All open source. Editoria has a small team of (currently) 2 devs working on the core Editoria platform, however much more horsepower is added when you consider dev done on CSS stylesheets, paged.js, XSweet (docx to HTML conversion) and Wax (editor). So really the team is more like 6.

Micropublications

The wonderful wormbase crew commissioned the Coko team to develop a micropublications platform for their micropublications.org project. It follows a simple micropubs workflow which has been designed and built to allow for its re-purposing by any micropubs use case. When it gets down to it however the line between a journal workflow and a micropubs workflow (even tho micropubs is a broadly used and misused term) is a little imaginary. The two look very similar, the real difference is the scope of material submitted and the intention to process it quickly. This platform could probably also be used for journal submissions.

It has been in active dev for a year. Unsure when we will go live, it will probably be a progressively live situation with the initial switch being thrown sometime this year. All open source. Currently one (extremely productive) Coko dev.

Aperture

Another micropubs platform, dev just just begun with one person. It is a platform built for the Organisation of Human Brain Mapping. Awesome folks. I did a workflow sprint with them last year. Cool people and this will be a cool platform. Most interesting is their desire to publish living entities eg Jupyter Notebooks. Super interesting. All open source.

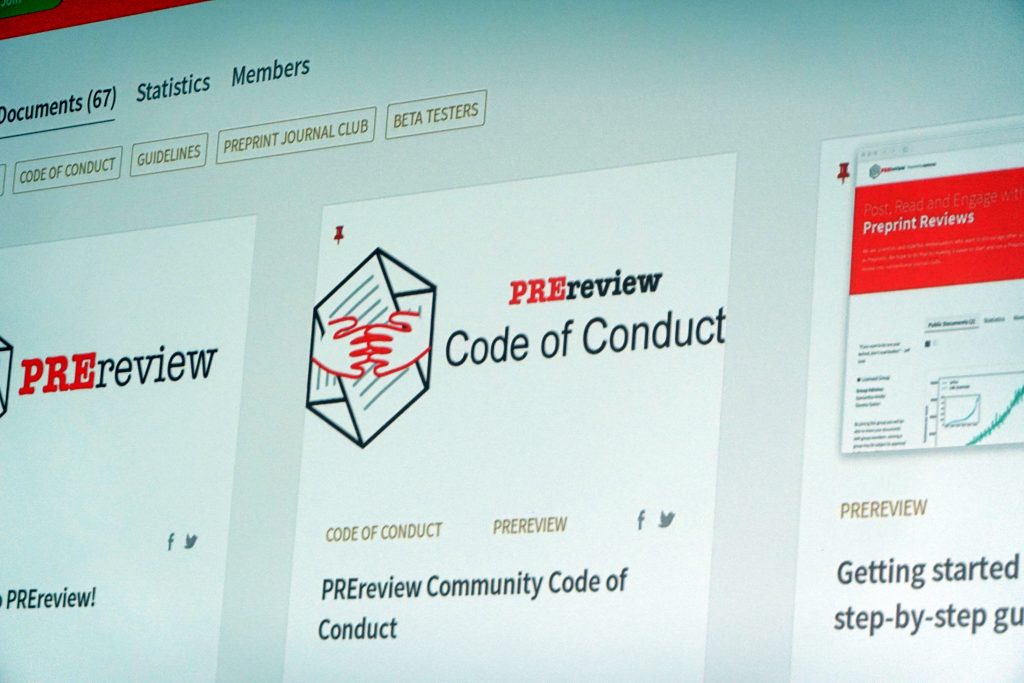

Pre-Review

Built by the pre-review folks and most specifically by Coko friend Rik Smith Unna. Great chap. This is a pre-print review system built to replace their current system that was built ontop of Authorea. All open source. One current dev (Rik).

Digital Science

A new addition – their innovations team is building a very interesting awards system. That in itself isn’t so exciting but their idea is to build a system with configurable workflows. You model a workflow, export, and then the PubSweet app is automatically compiled to suit. Very ambitious and very speculative. A lot to be learned from this and the good thing is that it is all open source and the DS folks are great to work with. Looking forward to see how this evolves. I think there is one developer currently working on this.

Xpub-Collabra

Xpub-collabra is a journal platform the Coko dev team have developed with Dan Morgan from the Collabra Psychology journal. We have put one year dev into it but with just one developer working on it. I’ve decided to pause dev for a little while so we can accelerate other efforts. Our platform dev team is small and we need to maximise resources, and as eLife and Hindawi have Journal offerings in the mix, we have less urgency to develop this platform at the moment. Though we aren’t abandoning it …stay tuned. (One interesting avenue is to collaborate with orgs with dev resources to finish this off – if that sounds like you, give me a call).

And…

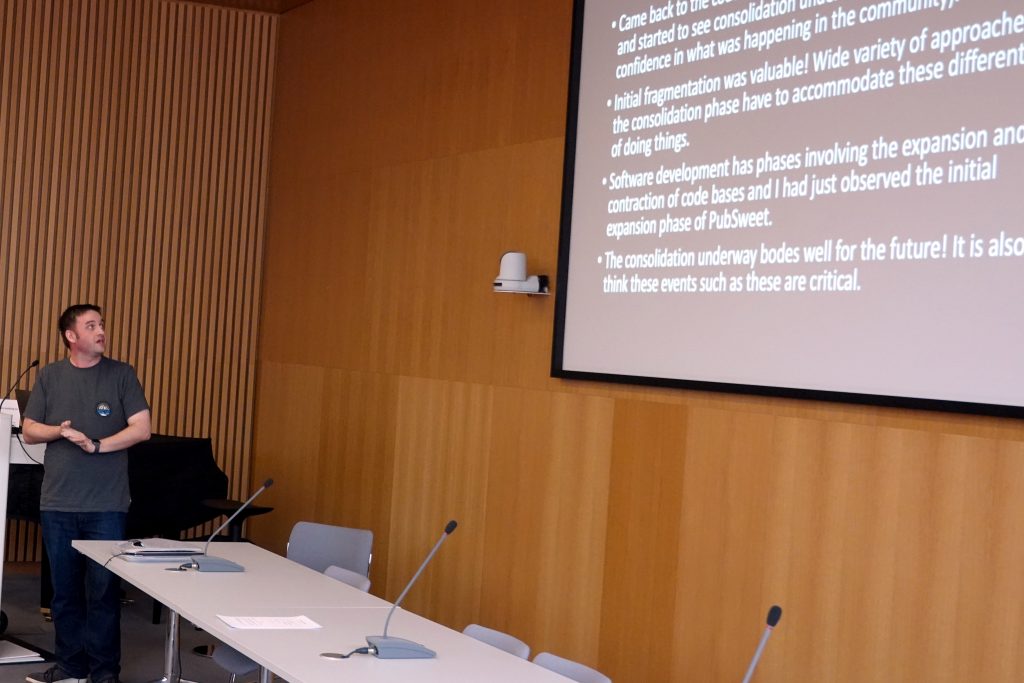

There are a number of projects in the wind. A pre-acquistion monograph workflow, emergency medicines workflow and some other stuff. Plenty going on…consider this though… although PubSweet has been in development for 3 years, we only started building the community a year ago. Already 9 platforms are being built, covering a variety of fascinating usecases and 3 of which are now in production, 2 of which started building less than a year ago… needless to say, watch this space.