The renderer

Note: this is an early version. It has been cleaned up some, but is still needing links and screenshots…. Apologies if the rawness offends you 🙂

This series is skipping around the toolchain, depending on what’s most in my mind at the moment. Today it’s file conversion, otherwise known as ‘rendering’. This is the process of converting one file type to another, for example, HTML-to-EPUB or Word-to-HTML, and so on.

It’s important to have file conversion in the book production world because we often want to convert the HTML to a book format – like book-formatted PDF, or EPUB, mobi and so on, or to import into a new document existing content contained in a file like MS Word.

Manual conversions

It is, of course, quite possible to do all your file conversion manually.

Should you wish to convert HTML into a nice book-formatted PDF, one possible strategy is to go out to InDesign or Scribus and lay it all out like our ancestors did as recently as 2014. Or, if you want to convert MS Word, for example, to HTML, you can just save it as HTML in Word… Yes, Word copies across a lot of formatting junk, but you can clean it up using purpose-built freely available software (such as HTMLTidy and CleanUp HTML), online services (like DirtyMarkup),or a handy app (such as Word HTML Cleaner)…

Manual conversion is not too bad a strategy, as long as it doesn’t take you too long, and it is often more efficient and faster than those convoluted hand-holding technical systems which promise to do it for you in one step. Despite the utopian promises made by automation… you often get better results doing the conversion manually.

I sometimes hear people in Book Sprints, for example, complain something to the tune of “why can’t I just click a button and import part of this paragraph from Wikipedia into the chapter, and then if the entry is updated in Wikipedia, I can just click the button again and it will be updated here”…

I try not to sigh too loudly when I hear this kind of ‘I have all the solutions!’ kind of ‘question’. Some day that may be feasible, but in the meantime, all the knowledge production platforms I have built have an OS-independent trans-format import mechanism which allows those handy keyboard shortcuts ‘control c’ and ‘control p’… sigh. Don’t knock copy and paste! It can get you a long way.

You can also build an EPUB by hand…

But, who really wants to do any of this? Isn’t it better to just push a button and taaadaaa! out pops the format of choice! (I have all the solutions! haha).

I think we can agree it is better if you are able to use a smart tool to convert your files, and the good news is that within certain parameters and for loads of use cases, this is possible. But don’t under-estimate the amount of tweaking for individual docs that might, at times (not always), be required.

Import and export are the same thing

The process of ‘importing’ a document is also sometimes known as ingestion. Before delving down into this, the first gotcha with file transformation is to avoid thinking about import and export as separate technical systems. That can, and has, caused a lot of extra work when building file conversion into a toolchain.

Both import and export are, actually, file conversion. The formats might differ, import might solely be Word-to-HTML in your system and the export HTML-to-EPUB. However, the process of file conversion has many needs that can be abstracted and applied to both of these cases. A quick example – file conversion is often processor and memory intensive. So effective management of these processes is quite important, and in addition, fallbacks for errors or fails need to be managed nicely. These two measures are required independent of the filetypes you are converting from or to. So don’t think about pipelining specific formats, try and identify as many requirements as possible for building just one file conversion system, not an import system plus an export system.

Ingestion

In importing documents to an HTML system, the big use case is MS Word. Converting from MS Word is a road full of potholes and gotchas. The first problem is that there is no single ‘MS Word’ file format, rather there are many many different file formats that all call themselves MS Word. So to initiate a transformation, you need to know what variety of MS Word you are dealing with.

Your life is made much easier if you can stipulate that your system requires one variety – .docx. If you do have to deal with other forms of Word, then it is possible to do transformations on the backend from miscellaneous Word file type X to .docx and then from .docx to HTML. Libreoffice, for example, offers binaries that do this in a ‘headless’ state (it can be executed from the command line without the need to fire up the GUI). However, the more transformations you undertake, the more errors in the conversion you are likely to introduce. Obviously, this then causes QA issues and will increase your workload per transform required.

Another real problem with MS Word versions before .docx, is that .docx is transparent, actually is just XML. So you can view what you are dealing with. Versions before this were horrible binaries – a big clump of ones and zeros – and after that a bunch of gunk. That same problem also exists when you use binaries like soffice (the Libreoffice binary for headless conversions) as it is also a big bucket of numbers. You can’t easily get your head into improving transformations with soffice unless you want to learn to etch code into your CPU with a protractor.

If you have to deal with MS Word at all, I recommend stipulating .docx as the accepted MS Word format. I am not a file type expert, far from it, but from people who do know a lot about file formats I know that .docx looks like it has been designed by a committee… and possibly, a committee whose members never spoke to each other. Additionally, Microsoft, being Microsoft, likes to bully people into doing things their way. .docx is a notable move away from that strategy, and does make it substantially easier to interoperate with other formats, however, there are some horrible gotchas like .docx having its own non-standard version of MathML. Yikes. So, life in the .docx lane is easier, but not necessarily as easy as it should be if we were all playing in the same sandbox like grownups.

I have tried many strategies for Word to HTML conversion. There are many open source solutions out there, but oddly, not as many good ones as you would hope. Recently I looked at these three rather closely:

- Calibre’s Python based ebook converter script

- OxGarage

- soffice (Libreoffice)

There are others…I can’t even remember which ones I have looked at in detail over the years. I have trawled Sourceforge and Github and Gitorious and other places. But the web is enormous these days and maybe there is just the oh-so-perfect solution that I have missed. If you know it then please email it to me, I’ll be ever so grateful (only Open Source solutions please!).

These three are all good solutions, but at the end of the day, I like OxGarage. I won’t go into too much detail about all of them but a quick top-of-mind whys and why-nots would include:

- Calibre’s scripts are awesome and extendable if you know Python, however they don’t support MS MathML to ‘real’ MathML conversions. That’s a show stopper for me.

- On the good side, though, Calibre’s developer community is awesome, and they are heroes in this field and deserve support, so if you are a Python coder or dev shop then, by all means, please pitch in and help them improve their .docx to HTML transforms. The world will be a better place for it.

- soffice does an ok job but it’s a black box, who knows what magic is inside? It tends to make really complex HTML and it is also really heavy on your poor hardware. I have used it a lot but I’m not that big a fan.

- OxGarage…well…I love OxGarage, so I really recommend this option…

OxGarage was developed by a European Commission-funded project and then, as is common for these kinds of projects, it dried up and was left on a shelf. Along came Sebastian Rhatz, a guru of file transformation, big Open Source guy, and also a force behind the Text Encoding Initiative. Sebastian is also the head of Academic IT Sevices at Oxford University. The guy has credentials! Also, he’s a terribly nice and helpful guy. He has so much experience in this area I feel the trivialness of my questions about our .docx to HTML woes at PLOS… afraid he might absentmindedly swipe me out of the way like I was an inconsequential little midge.. but he’s such a nice chap, instead he invites midges out to lunch.

So, Sebastian picked up the Java code and added some better conversions. OxGarage is essentially a Java framework that manages multiple different types of conversions. You feed it and are fed from it by a simple web API. It doesn’t have the best error handling, but it does do a good job. The .docx to HTML conversion is multi-step. First, the .docx is converted to TEI – a very rich, complex markup, and then from TEI via XSL to HTML. That means that all you really need to worry about is tweaking the XSL to improve the transformation and that’s not too tricky. It could be argued that the TEI conversion is a redundant step. I think it is. But OxGarage works out of the box and does a pretty good job so we have adopted it for the project I am working on for PLOS, and we are happy with it. We have added some special (Open) Sauce but I’ll get to that later. We are using it and will shoot for more elegant solutions later (and we have designed a framework to make this an easy future path).

If you are looking for Word-to-HTML conversion tools, I recommend OxGarage. Im not saying it’s the optimal way to do things, but it will save you having to build another file conversion system from scratch, and from what I can tell from Sebastian, that would take considerable effort.

HTML to books

The other side of the tracks is the conversion of the HTML you have into a book file format. We live in a rather tangled semantic world when it comes to this part of the toolchain. Firstly, it’s hard to know what a book file format actually is these days… on a normal day, I would say a book file format is a file format that can display a human readable structured narrative. Yikes. That’s not particularly helpful… Let’s just say for now that a book file format is – EPUB, book formatted PDF, HTML, and Mobi.

So, transforming from HTML to HTML sounds pretty easy. It is! The question is really how do you want your book to appear on the web? Make that decision first, and then build it. Since you are starting with HTML this should be rather easy and could be done in any programming language.

The next easiest is EPUB. EPUB contains the content in HTML files stored in a zip file with the .epub suffix. That is also easy to create and, depending on your programming language, there are plenty of libraries to help you do this. So moving on…

Mobi. Ok.. mobi is a proprietary format and rather horrible. It contains some HTML, some DB stuff… I don’t know… a bit of bad magic, frogs legs… that kind of thing. My recommendation is to first create your EPUB and then use Calibre’s awesome ebook converter script to create the mobi on the backend. Actually, if you use this strategy, you get all the other Calibre output formats for free, including (groan) .docx if you need it. Honestly, go give those Calibre guys all your love, some dev time, and a bit of cash. They are making our world a whole lot easier.

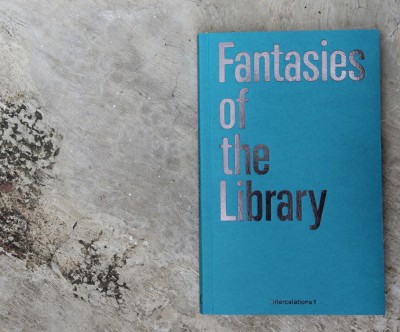

Ok… the holy grail… people still like paper books, and paper books are printed from PDF. Paper these days is a post-digital artifact. So first you need that awkward sounding book-formatted PDF.

Here there are an array of options and then there is this very exciting world that can open to you if you are willing to live a little on the bleeding edge…. I’m referring to CSS Regions… but let’s come back to that.

First, I want to say I am disappointed that some ‘Open Source’ projects use proprietary code for HTML-to-PDF conversion. That includes Press Books and Wikipedia. Wikipedia is re-tooling their entire book-formatted-PDF conversion process to be based on LaTeX and that is an awesome decision. However, right now they use the proprietary PrinceML as does Press Books. I like both projects, but I get a little disheartened when projects with a shared need don’t put some effort into an Open Source solution for their toolchain.

All book production platforms that produce paper books need an HTML-to-PDF renderer to do the job. If it is closed source then I think it needs to be stated that the project is partially Open Source. I’m a stickler for this kind of stuff but also, I am saddened that adoption of proprietary components stops the effort to develop the Open Source solutions we need, while simultaneously enabling proprietary solutions to gain market dominance – which, if you follow the logic through, traps the effort to develop a competitive Open Source solutions in a vicious circle. I wish that more people would try, like the Wikimedia Foundation is trying, to break that cycle.

The browser as renderer

There is one huge Open Source hero in this game. Jacob Truelson. He created WKHTMLTOPDF when he was a university tutor because he wanted his students to be able to write in HTML and give him nicely formatted PDF for evaluation. So he grabbed a headless Webkit, added some QT magic, some tweaks, and made a command line application that converts HTML to book-formatted PDF. We used it in the early days of FLOSS Manuals and it is still one of the renderer choices in the Booktype file conversion suite (Objavi). It was particularly helpful when we needed to produce books in Farsi which contain right to left text. No HTML to PDF renderer supported this at the time except WKHTMLTOPDF because it was based on a browser engine that had RTL support built in.

Some years later WKHTMLTOPDF was floundering, mainly because Jacob was too busy, and I tried to help create a consortium around the project to find developers and finance. However I didn’t have the skills, and there was little interest. Thankfully the problem solved itself over time, and WKHTMLTOPDF is now a thriving project and very much in demand.

WKHTMLTOPDF really does a lot of cool stuff, but more than this, I firmly believe the approach is the right approach. The application uses a browser to render the PDF…that is a HUGE innovation and Jacob should be recognised for it. What this means is – if you are making your book in HTML in the browser, you have at your fingertips lots of really nice tools like CSS and JavaScript. So, for example, you can style your book with CSS or add javaScript to support the rendering of Math, or use typography JavaScripts to do cool stuff… When you render your book to PDF with a browser, you get all that stuff for free. So your HTML authoring environment and your rendering environment are essentially the same thing… I can’t tell you how much that idea excites me. It is just crazy! This means that all those nice JavaScripts you used, and all that nice CSS which gave you really good looking content in the browser will give you the same results when rendered to PDF. This is the right way to do it and there is even more goodness to pile on, as this also means that your rendering environment is standards-based and open source…

Awesome. This is the future. And the future is actually even brighter for this approach than I have stated. If you are looking to create dynamic content – let’s say cool little interactive widgets based on the incredible tangle! Library – for ebooks (including web-based HTML) … if you use a browser to render the PDF you can actually render the first display state of the dynamic content in your PDF. So, if you make an interactive widget, in the paper book you will see the ‘frozen’ version, and in the ebook/HTML version you get the dynamic version – without having to change anything. I tested this a long time ago and I am itching to get my teeth into designing content production tools to do this.

So many things to do. You can get an idea how it works by visiting that Tangle link above… try the interactive widgets in the browser, and then just try printing to PDF using the browser… you can see the same interactive widgets you played with also print nicely in a ‘static’ state. That gets the principle across nicely.

So a browser-based renderer is the right approach, and Prince, which is, it must be pointed out, partly owned by Håkon Wium Lie, is trying to be a browser by any other name. It started with HTML and CSS to PDF conversion and now…oo!… they added Javascript… so…are they a browser? No? I think they are actually building a proprietary browser to be used solely as a rendering engine. It just sounds like a really bad idea to me. Why not drop that idea and contribute to an actual open source browser and use that. And those projects that use Prince, why not contribute to an effort to create browser-based renderers for the book world? It’s actually easier than you think. If you don’t want to put your hands into the innards of WebKit, then do some JavaScript and work with CSS Regions (see below).

This brings us to another part of the browser-as-renderer story, but first I think two other projects need calling out for thanks. Reportlab for a long time was one of the only command line book-formatted-PDF rendering solutions. It was proprietary but had a community license. That’s not all good news, but at least they had one foot in the Open Source camp. However, what really made Reportlab useful was Dirk Holtwick’s Pisa project that provided a layer on top of Reportab so you could convert HTML to book-formatted-PDF.

The bleeding edge

So, to the bleeding edge. CSS Regions is the future for browser-based PDF rendering of all kinds. Interestingly Håkon Wium Lie has said, in a very emphatic way, that CSS Regions is bad for the web…perhaps he means bad for the PrinceML business model? I’m not sure, I can only say he seemed to protest a little too much. As a result, Google pulled CSS regions out of Chrome. Argh.

However CSS Regions are supported in Safari, and in some older versions of Chrome and Chromium (which you can still find online if you snoop around). Additionally, Adobe has done some awesome work in this area (they were behind the original implementation of CSS Regions in WebKit – the browser engine that used to be behind Chrome and which is still used by Safari). Adobe built the CSS Regions polyfil – a javaScript that plays the same role as built-in CSS regions.

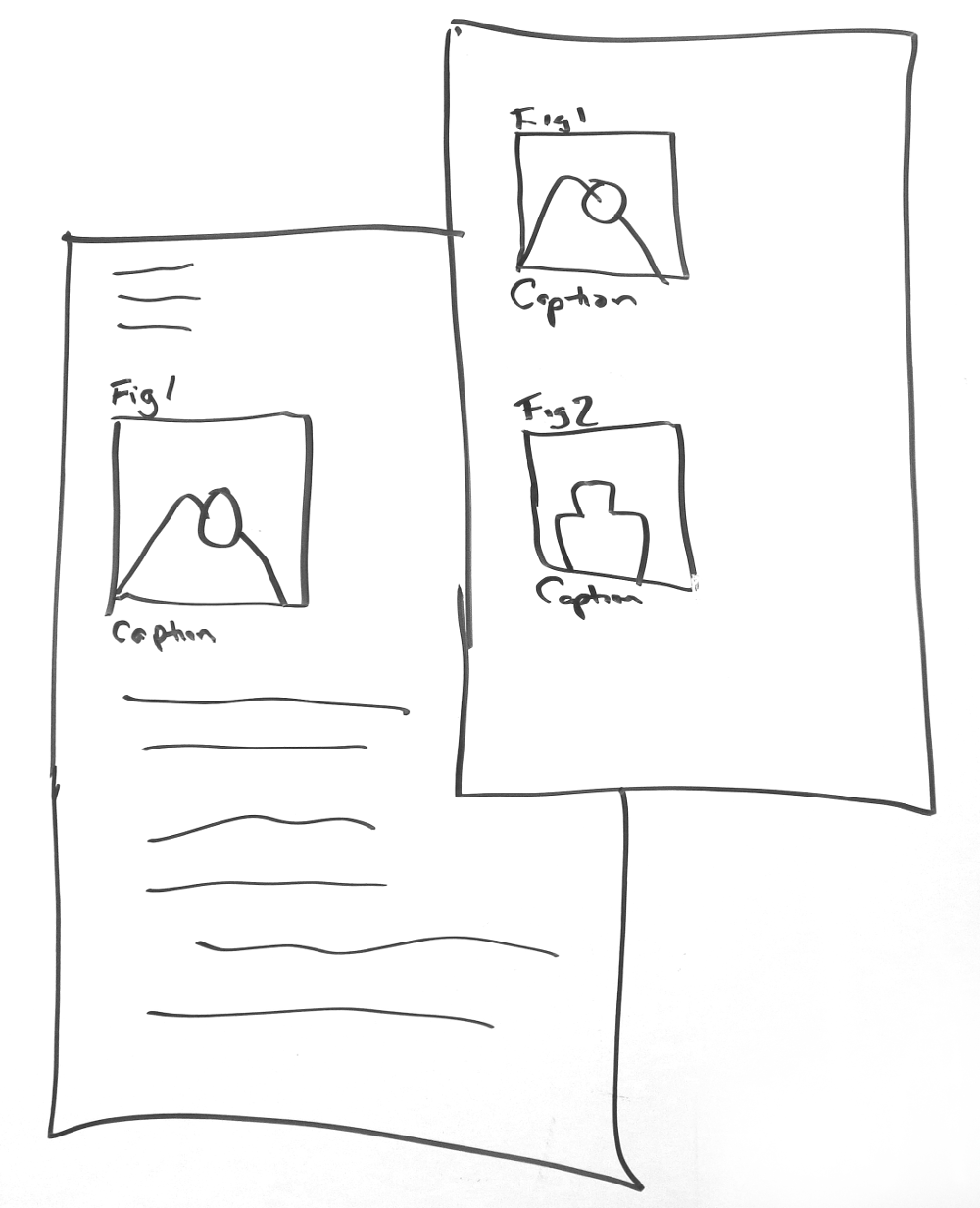

When CSS regions came online in early 2012, Remko Siemerink and I experimented with CSS Regions at an event at the Sandberg (Amsterdam) for producing book- formatted PDF. I’m really happy to see that one of these experiments is still online (NB this needs to be viewed in a browser supporting CSS Regions).

It was obviously the solution for pagination on the web, and once you can paginate in the browser, you can convert those web pages to PDF pages for printing. This was the step needed for a really flexible browser-based book-formatted-PDF rendering solution. It must be pointed out however, that it’s not just a good solution for books… at BookSprints.net we use CSS Regions to create a nicely formatted and paginated form in the browser to fill out client details. Then we print it out to PDF and send it…

Adobe is on to this stuff. They seem to believe that the browser is the ‘design surface’ of the future. Which seems to be why they are putting so much effort into CSS Regions. Im not a terribly big fan of InDesign and proprietary Adobe strategies and products, but credit where credit is due. Without Adobe CSS Regions ^^^ would just be an idea, and they have done it all under open source licenses (according to Alan Stearns from Adobe, the Microsoft and IE teams also contributed to this quite substantially).

At the time CSS Regions were inaugurated, I was in charge of a small team building Booktype in Berlin, and we followed on from Remko’s work, grabbed CSS Regions, and experimented with a JavaScript book renderer. In late 2012, book.js was born (it was a small team but I was lucky enough to be able to dedicate one of my team, Johannes Wilm, to the task) and it’s a JavaScript that leverages CSS Regions to create paginated content in the browser, complete with a table of contents, headers, footers, left-right margin control, front matter, title pages…etc… we have also experimented with adding contenteditable to the mix so you can create paginated content, tweak it by editing it directly in the browser, and outputting to PDF. It works pretty well and I have used it to produce 40 or 50 books, maybe more. The Fiduswriter team has since forked the code to pagination.js which I haven’t looked at too closely yet as I’m quite happy with the job book.js does.

CSS Regions is the way to go. It means you can see the book in the browser and then print to PDF and get the exact same results. It needs some CSS wizardry to get it right, but when you get it right, it just works. Additionally, you can compile a browser in a headless state and run it on the command line if you want to render the book on the backend.

Wrapping it all up

There is one part of this story left to be told. If you are going to go down this path, I thoroughly recommend you create an architecture that will manage all these conversion processes and which is relatively agnostic to what is coming in and going out. For Booktype, Douglas Bagnall and Luka Frelih built the original Objavi, which is a Python based standalone system that accepts a specially formatted zip file (booki.zip) and outputs whatever format you need. It manages this by an API, and it serves Booktype pretty well. Sourcefabric still maintains it and it has evolved to Objavi 2.

However, I don’t think it’s the optimal approach. There are many things to improve with Objavi, possibly the most important is that EPUB should be the file format accepted, and then after the conversion process takes place EPUB should be returned to the book production platform with the assets wrapped up inside. If you can do this, you have a standards-based format for conversion transactions, and then any project that wants to can use it. More on this in another post. Enough to say that the team at PLOS are building exactly this and adding on some other very interesting things to make ‘configurable pipelines’ that might take format X though an initial conversion, through a clean up process, and then a text mining process, stash all the metadata in the EPUB and return it to the platform. But that’s a story for another day…